AI Governance as a Management System: The Complete Guide for 2025

December 01, 2025 6 min read

Introduction: Why AI Governance Matters More Than Ever

AI adoption is accelerating across every industry. Employees are using generative AI in their daily workflows, vendors are embedding AI into SaaS platforms, and entire business processes are being redesigned around automation and decision assistance.

But governance has not kept pace.

Organizations now face a critical question:

How do we harness AI for value—while staying compliant, ethical, and trusted?

The answer lies in a structured, integrated approach:

AI Governance as a Management System.

This pillar page explores the core components of a modern AI governance program, aligned with ISO/IEC 42001:2023, the EU AI Act, NIST AI RMF, and emerging Canadian regulations. It also shows how organizations can embed governance into enterprise architecture and management systems—what we call Management System 4.0.

Table of Contents

- What Is AI Governance?

- Why Treat AI Governance as a Management System

- ISO/IEC 42001: The Global Standard for AI Management Systems

- Building the AI Operating Model: Roles, Ownership, Accountability

- AI Lifecycle Controls Based on NIST AI RMF

- Shadow AI and the Rise of Everyday AI Tools

- Vendor, Third-Party, and Supply-Chain Governance

- The Regulatory Landscape: EU AI Act, AIDA, and Provincial AI Frameworks

- AI Monitoring, Surveillance, and Psychological Safety

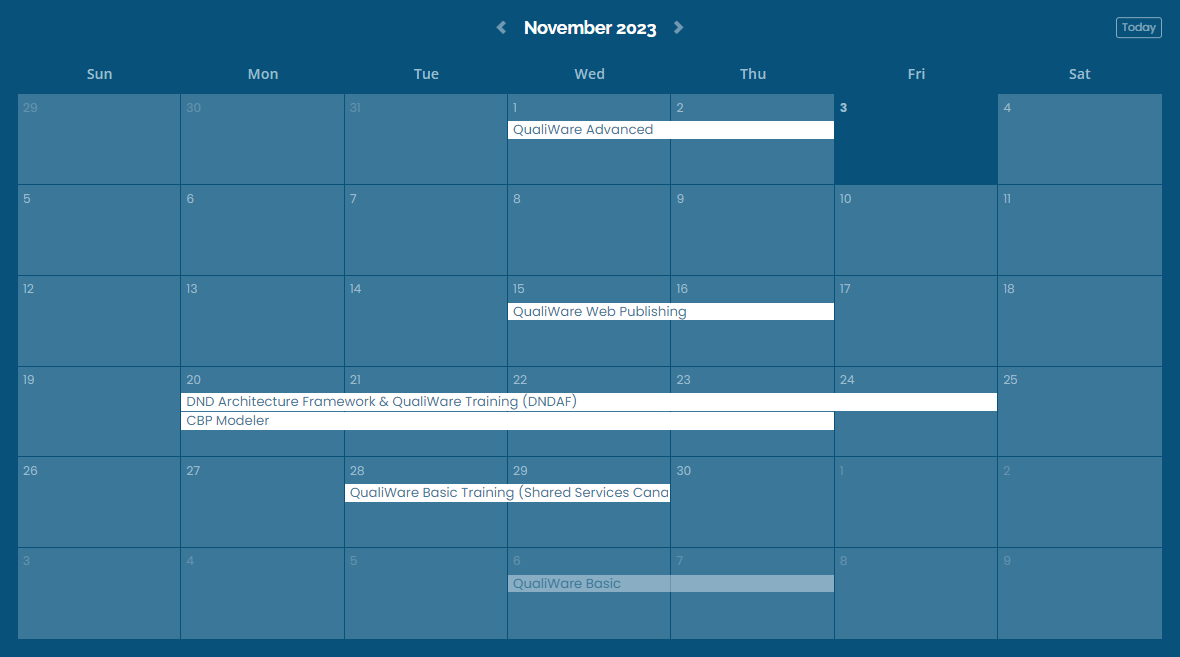

- Skills, Training, and AI Literacy

- Knowledge Governance & Change Management

- Metrics, Maturity, and Continuous Assurance

- How Enterprise Architecture and QualiWare Support AIMS

- Conclusion: The Road to Trustworthy AI

1. What Is AI Governance?

AI governance is the system of policies, processes, roles, and controls an organization uses to ensure AI is:

- Safe

- Fair

- Transparent

- Compliant

- Accountable

- Effective

Modern AI governance goes beyond risk mitigation. It enables organizations to adopt AI confidently, consistently, and in a way that strengthens trust across the enterprise.

2. Why Treat AI Governance as a Management System

Many organizations still view AI governance as:

- A set of policies

- A compliance checklist

- A technical security discipline

- A risk assessment exercise

This narrow view leads to siloed, inconsistent adoption—and leaves major gaps.

A stronger approach is to treat AI governance as a Management System:

- Structured

- Repeatable

- Auditable

- Integrated with business processes

- Owned across the organization

- Continuously improving

This aligns with Management System 4.0—a connected, digital-first approach that integrates EA, risk, compliance, and operations into one living system.

3. ISO/IEC 42001: The Global Standard for AI Management Systems

Published in December 2023, ISO/IEC 42001 is the first international management system standard specific to AI. It uses the same harmonized structure as ISO 9001 and ISO/IEC 27001, making it familiar to organizations already operating multiple management systems.

ISO/IEC 42001 requires organizations to establish controls for:

AI Risk Assessment & Treatment

Understanding and mitigating harm, bias, safety issues, and misuse.

AI Lifecycle Control

Managing AI from concept → design → development → deployment → monitoring → retirement.

Third-Party AI Oversight

Ensuring vendors, cloud providers, and SaaS tools follow governance requirements.

Documentation and Traceability

Keeping records that demonstrate compliance to auditors, regulators, and stakeholders.

Integration with Existing Systems

The standard is designed to integrate with an organization's existing management systems, so existing governance structures can be extended rather than duplicated in new silos.
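The lifecycle stages listed under AI Lifecycle Control can be made auditable by modelling them as an explicit state machine. The following is a minimal Python sketch, not something prescribed by ISO/IEC 42001: the stage names come from the text above, while `Stage`, `ALLOWED`, and `advance` are hypothetical names and the permitted transitions are assumptions.

```python
from enum import Enum


class Stage(Enum):
    CONCEPT = "concept"
    DESIGN = "design"
    DEVELOPMENT = "development"
    DEPLOYMENT = "deployment"
    MONITORING = "monitoring"
    RETIREMENT = "retirement"


# Assumed transition rules: forward one stage at a time, retirement
# from any stage, and a loop from monitoring back to development
# for retraining or fixes.
ALLOWED = {
    Stage.CONCEPT: {Stage.DESIGN, Stage.RETIREMENT},
    Stage.DESIGN: {Stage.DEVELOPMENT, Stage.RETIREMENT},
    Stage.DEVELOPMENT: {Stage.DEPLOYMENT, Stage.RETIREMENT},
    Stage.DEPLOYMENT: {Stage.MONITORING, Stage.RETIREMENT},
    Stage.MONITORING: {Stage.DEVELOPMENT, Stage.RETIREMENT},
    Stage.RETIREMENT: set(),
}


def advance(current: Stage, target: Stage) -> Stage:
    """Return the new stage, or raise if the transition is not allowed."""
    if target not in ALLOWED[current]:
        raise ValueError(f"{current.value} -> {target.value} is not permitted")
    return target
```

Rejecting an out-of-order transition (for example, concept straight to deployment) gives a natural place to require an approval record before a system moves forward.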

4. Building the AI Operating Model: Roles, Ownership, Accountability

Organizations struggle when AI ownership is unclear. AI governance requires a defined operating model that clarifies:

Who Owns AI Risk?

Common owners include the CRO, CIO, CISO, CDO, or a dedicated CAIO.

Who Approves High-Risk AI?

Often: Legal, HR, Ethics Office, Data Governance teams, or Works Councils.

Who Is the “Provider” vs “Deployer”?

A key distinction in the EU AI Act:

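- Providers develop an AI system (or have one developed) and place it on the market or put it into service under their own name

- Deployers use an AI system under their own authority in a professional capacity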

AI Councils and Review Boards

Many organizations establish:

- AI Governance Councils

- AI Risk Review Boards

- Responsible AI Committees

These bodies define decision rights, escalation paths, and governance boundaries.

5. AI Lifecycle Controls Based on the NIST AI RMF

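The NIST AI Risk Management Framework gives organizations a practical way to structure lifecycle controls around four functions: MAP, MEASURE, MANAGE, and GOVERN. These are not strictly sequential phases; GOVERN is cross-cutting and informs the other three.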

MAP: Understand Context and Risk

- Impact assessments

- Use case classification

- Data quality assessment

MEASURE: Test, Validate, Evaluate

- TEVV processes

- Bias and fairness testing

- Robustness and cybersecurity testing

MANAGE: Mitigate and Control

- Human-in-the-loop design

- Override protocols

- Model documentation and versioning

GOVERN: Sustain and Improve

- Continuous monitoring

- Drift detection

- Incident response

- Periodic reviews

The goal: AI systems must be safe not only on day one, but every day they operate.
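Drift detection, listed above, is often implemented by comparing a live distribution of inputs or scores against a baseline. One common statistic is the Population Stability Index: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the baseline and live proportions in bin i. The NIST AI RMF does not prescribe PSI; the sketch below is one possible approach, and the function name `psi`, the equal-width binning, and the 1e-6 floor are all assumptions.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating. The appropriate threshold depends on the model and should be set as part of the monitoring plan.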

6. Shadow AI and the Rise of Everyday AI Tools

Employees are using AI in ways organizations cannot see:

- Public GenAI platforms (ChatGPT, Gemini, Claude)

- AI-enabled SaaS features (CRM, ticketing, email)

- Low-code automations and bots

- Browser extensions

This creates risks around:

- Data leakage

- Privacy non-compliance

- IP exposure

- Unapproved automated decision-making

Leading organizations respond by creating:

AI Usage Tiers

Green – safe, low-risk uses (summaries, brainstorming)

Amber – conditional uses (internal data, low-sensitivity content)

Red – prohibited uses (HR data, customer data, confidential IP)

Registration & Approval for Internal Automations

Citizen developers can innovate—but safely and visibly.

Governance Without Surveillance

Policies that guide usage rather than punish curiosity.
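The usage tiers described above can be expressed as a small classification rule so that tooling and policy stay in sync. This is a minimal sketch under stated assumptions: the tier names come from the text, but the category labels (`"hr"`, `"customer"`, `"confidential_ip"`, `"public"`) and the names `Tier` and `classify` are hypothetical, and treating anything unrecognized as Amber is a design choice rather than a rule from any standard.

```python
from enum import Enum


class Tier(Enum):
    GREEN = "green"
    AMBER = "amber"
    RED = "red"


# Hypothetical labels for the data categories named in the tier descriptions.
RED_DATA = {"hr", "customer", "confidential_ip"}
GREEN_DATA = {"public"}


def classify(data_categories: set[str]) -> Tier:
    """Return the most restrictive tier triggered by the data involved."""
    if data_categories & RED_DATA:
        return Tier.RED
    if data_categories <= GREEN_DATA:
        return Tier.GREEN
    # Internal, low-sensitivity, or unrecognized data needs conditions.
    return Tier.AMBER
```

Checking the Red categories first means a prompt that mixes public and HR data is still prohibited, and defaulting unknown categories to Amber avoids silently approving data nobody has classified.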

7. Vendor, Third-Party, and Supply-Chain Governance

Most organizations will buy more AI than they will build.

Effective AI governance must therefore extend to vendors:

Requirements for AI Vendors

- Technical documentation

- Model cards and testing results

- Conformity assessments (EU AI Act)

- Cybersecurity assurance

- Incident reporting obligations

- Audit rights

Updated Procurement Language

- AI transparency requirements

- Prohibited uses

- Bias and safety testing expectations

Risk Tiering for AI Vendors

High-impact vendors require deeper due diligence and more frequent reviews.
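The vendor requirements and risk tiering above can be tracked as structured data rather than in free-text questionnaires. The sketch below is illustrative only: `Vendor`, `REQUIRED_EVIDENCE`, and `REVIEW_INTERVAL_MONTHS` are hypothetical names, the review intervals are placeholder values, and applying every evidence item to every vendor is a simplification (conformity assessments, for instance, are only relevant where the EU AI Act requires them).

```python
from dataclasses import dataclass, field

# Evidence items taken from the vendor requirements listed above.
REQUIRED_EVIDENCE = frozenset({
    "technical_documentation",
    "model_cards_and_test_results",
    "conformity_assessment",
    "cybersecurity_assurance",
    "incident_reporting_terms",
    "audit_rights",
})

# Assumed review cadence in months per vendor tier (placeholder values).
REVIEW_INTERVAL_MONTHS = {"high": 6, "medium": 12, "low": 24}


@dataclass
class Vendor:
    name: str
    tier: str  # "high", "medium", or "low"
    evidence: set[str] = field(default_factory=set)

    def missing_evidence(self) -> set[str]:
        """Evidence items still outstanding for this vendor."""
        return set(REQUIRED_EVIDENCE - self.evidence)

    def review_interval_months(self) -> int:
        """Months between reviews, shorter for higher-impact vendors."""
        return REVIEW_INTERVAL_MONTHS[self.tier]
```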

8. The Regulatory Landscape: EU AI Act, AIDA, and Provincial Frameworks

Organizations face a growing patchwork of rules:

EU AI Act

The world’s most comprehensive AI regulation, covering:

- High-risk systems

- Transparency duties

- Monitoring obligations

- Safety requirements

- Governance documentation

Canada’s Artificial Intelligence and Data Act (AIDA)

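AIDA was part of Bill C-27, which died on the order paper when Parliament was prorogued in January 2025. New federal AI legislation may follow, likely with revised language.

Federal Instruments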

- TBS Directive on Automated Decision-Making

- Government of Canada AI Strategy

Provincial Developments

Ontario’s Trustworthy AI Framework signals emerging expectations across provinces.

The challenge: Build one program that can scale across jurisdictions.
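One way to approach a single program across jurisdictions is to define internal controls once and map each to the instruments it helps satisfy. The sketch below assumes such a mapping; the entries in `CONTROL_MAP` are illustrative, deliberately omit clause numbers, and would need to be validated against the text of each instrument. The names `CONTROL_MAP`, `controls_for`, and `gaps` are hypothetical.

```python
# Illustrative mapping from internal controls to the instruments
# they help satisfy. Validate against each instrument before use.
CONTROL_MAP: dict[str, set[str]] = {
    "impact_assessment": {"EU AI Act", "ISO/IEC 42001", "NIST AI RMF", "TBS Directive"},
    "human_oversight": {"EU AI Act", "TBS Directive"},
    "technical_documentation": {"EU AI Act", "ISO/IEC 42001"},
    "incident_reporting": {"EU AI Act"},
}


def controls_for(framework: str) -> set[str]:
    """Controls that contribute to the given framework."""
    return {c for c, frameworks in CONTROL_MAP.items() if framework in frameworks}


def gaps(implemented: set[str], framework: str) -> set[str]:
    """Mapped controls for a framework that are not yet implemented."""
    return controls_for(framework) - implemented
```

Entering a new jurisdiction then becomes a matter of adding its name to the relevant controls and reviewing the resulting gaps, rather than standing up a parallel program.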

9. AI Monitoring, Surveillance, and Psychological Safety

AI-powered monitoring tools can track:

- Productivity

- Keystrokes

- Communication patterns

- Sentiment

- Performance indicators

These capabilities raise ethical, legal, and labour concerns.

Leading organizations adopt:

Clear Boundaries

Where AI monitoring is acceptable vs prohibited.

Consultation Requirements

Especially where unions, Works Councils, or labour legislation apply.

Contestability Mechanisms

Employees must be able to understand and challenge algorithmic decisions that affect them.

Transparent Communication

Trust increases when employees understand why tools are deployed and how they are governed.

10. Skills, Training, and AI Literacy

AI governance succeeds only when employees understand:

- How AI works

- What risks it introduces

- Their role in AI safety

- How to escalate concerns

- How to use AI responsibly

Organizations now develop differentiated training for:

- Boards & executives

- Data, product, and engineering teams

- Front-line employees

- Risk, audit, and compliance functions

AI literacy will soon become as foundational as cybersecurity awareness.

11. Knowledge Governance and Change Management

Governance fails when documentation becomes disconnected from reality.

Organizations must govern knowledge, not just technology:

Key Practices

- Define who can create or change AI policies

- Keep content aligned with live processes and system behaviour

- Explain AI decisions in plain language

- Build content inside integrated EA/GRC platforms (e.g., QualiWare)

- Avoid “compliance theatre” where documents exist but nothing changes

The goal is clarity, not complexity.

12. Metrics, Maturity, and Continuous Assurance

Tracking governance effectiveness requires more than a few KPIs.

Organizations now adopt:

AI Governance Maturity Models

From ad-hoc → repeatable → defined → managed → optimized.

Continuous Assurance

Internal audit, external assessments, and, increasingly, ISO/IEC 42001 certification.

Live Dashboards

Pulled directly from:

- AI inventory systems

- Approval workflows

- Risk assessments

- Monitoring tools

- Incident management systems

- Training completion data

AI governance becomes real-time, not annual.
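The maturity levels described above are ordered, so they can be represented as an ordered enumeration and rolled up for a dashboard. This is a minimal sketch: the level names come from the text, while `Maturity`, `overall`, the example domain names, and the "weakest domain caps the overall score" rule are assumptions.

```python
from enum import IntEnum


class Maturity(IntEnum):
    AD_HOC = 1
    REPEATABLE = 2
    DEFINED = 3
    MANAGED = 4
    OPTIMIZED = 5


def overall(domain_scores: dict[str, Maturity]) -> Maturity:
    """Overall maturity is capped by the weakest domain (an assumed rule)."""
    return min(domain_scores.values())


# Example with hypothetical governance domains.
scores = {
    "inventory": Maturity.MANAGED,
    "vendor_oversight": Maturity.REPEATABLE,
    "training": Maturity.DEFINED,
}
print(overall(scores).name)
```

Taking the minimum rather than an average keeps one strong domain from masking a weak one, which suits assurance reporting; an average may be more useful for tracking progress over time.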

13. How Enterprise Architecture and QualiWare Support AIMS

To run AI governance as a management system, organizations need:

- Complete visibility into processes and systems

- Connections between AI assets and business operations

- Change management workflows

- Risk and control integration

- Centralized content and versioning

- Audit-ready documentation

This is exactly where EA platforms like QualiWare excel.

With QualiWare, organizations can:

- Map AI use cases to workflows, roles, risks, and controls

- Maintain a central AI register

- Automate approval workflows

- Link AI lifecycle steps to governance artefacts

- Build dashboards for compliance reporting

- Embed AIMS directly into Management System 4.0
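To illustrate what a central AI register might hold, the sketch below models one entry linking a system to its owner, risks, and controls. It is a generic Python illustration and does not represent QualiWare's data model or API; every name in it (`AIRegisterEntry`, its fields, `unmitigated`) is hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class AIRegisterEntry:
    system_name: str
    owner: str
    eu_ai_act_role: str   # "provider" or "deployer"
    lifecycle_stage: str  # e.g. "deployment" or "monitoring"
    risks: list[str] = field(default_factory=list)
    controls: list[str] = field(default_factory=list)
    next_review: Optional[date] = None

    def is_review_overdue(self, today: date) -> bool:
        """True when a review date is set and has already passed."""
        return self.next_review is not None and self.next_review < today


def unmitigated(entries: list[AIRegisterEntry]) -> list[str]:
    """Names of systems with recorded risks but no linked controls."""
    return [e.system_name for e in entries if e.risks and not e.controls]
```

Queries such as `unmitigated` are the kind of check a compliance dashboard could run continuously against the register.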

Conclusion: The Road to Trustworthy AI

AI is not just a technology shift—it is a governance shift.

Organizations that succeed will be those that:

- Integrate AI governance into their existing management systems

- Clarify ownership and decision-making

- Govern the full AI lifecycle

- Balance innovation with accountability

- Build AI literacy across the organization

- Use enterprise architecture and modern GRC tools as the backbone of AIMS

AI governance is now a business capability, not a compliance checkbox.

And the organizations that build it intentionally will gain a long-term advantage—in trust, in efficiency, and in confidence to innovate.

Ready to Build an AI Governance Program That Works?

CloseReach helps organizations move from AI uncertainty to AI confidence by integrating governance, enterprise architecture, and compliance into one unified ecosystem.

Whether you're exploring ISO/IEC 42001, preparing for the EU AI Act, or building a practical, business-aligned AI governance model, our team can help.

Book a discovery session to see how AI governance fits into your Management System strategy.
